
I am a Machine Learning Engineer at Apple Zurich, focusing on data curation, model evaluation, and generative models in computer vision. Previously, I was a Research Scientist at Sony AI Zurich, where I managed the local Responsible AI team. I have led research projects and helped scale research teams in computer vision and related fields. My research has been published at top-tier venues (e.g., Nature, NeurIPS, ICCV) and covered by MIT Tech Review, The Verge, and Wired, among others. Before that, I completed my PhD at the Video & Image Sense Lab of the University of Amsterdam under the supervision of Cees Snoek. My dissertation addressed visual similarity, learning with limited labels, and model biases. I was fortunate to be partially funded by multiple NSERC scholarships. [LinkedIn] [Google Scholar] [GitHub]


Alice Xiang, Jerone Andrews, Rebecca Bourke, William Thong, Julienne LaChance, Tiffany Georgievski, Apostolos Modas, Aida Rahmattalabbi, Yunhao Ba, Shruti Nagpal, Orestis Papakyriakopoulos, Dora Zhao, Jinru Xue, Victoria Matthews, Linxia Gong, Austin Hoag, Mircea Cimpoi, Swami Sankaranarayanan, Wiebke Hutiri, Morgan Scheuerman, Albert Abedi, Peter Stone, Peter Wurman, Hiroaki Kitano, Michael Spranger. Nature, 2025. [paper] [project page] We operationalize ethical standards for data collection and benchmark the fairness of human-centric computer vision models. The paper was featured on the cover of Nature!


Jerone Andrews, Dora Zhao, William Thong, Apostolos Modas, Orestis Papakyriakopoulos, Alice Xiang. Neural Information Processing Systems, Datasets and Benchmarks Track (NeurIPS D&B), 2023. [paper] [arxiv] [code] We lay out considerations and recommendations for responsible curation of computer vision datasets.


William Thong, Przemyslaw Joniak, Alice Xiang. International Conference on Computer Vision (ICCV), 2023. [paper] [arxiv] [code] We measure apparent skin color beyond a unidimensional scale, using luminance and hue angle.


Orestis Papakyriakopoulos, Anna Seo Gyeong Choi, Jerone Andrews, Rebecca Bourke, William Thong, Dora Zhao, Alice Xiang, Allison Koenecke. ACM Conference on Fairness, Accountability, and Transparency (FAccT), 2023. [paper] [arxiv] [code] We augment datasheets to encourage ethical considerations in speech datasets.


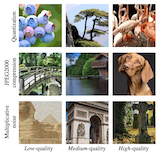

William Thong, Jose Costa Pereira, Sarah Parisot, Ales Leonardis, Steven McDonagh. British Machine Vision Conference (BMVC), 2022. [paper] [arxiv] [code] We enrich image comparisons for learning a perceptual quality metric.


William Thong and Cees G. M. Snoek. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), 2022. [paper] [arxiv] [code] We create a diverse set of labels from instance, attribute, and category similarities for visual product search.


William Thong and Cees G. M. Snoek. British Machine Vision Conference (BMVC), 2021. [paper] [arxiv] [code] We identify and mitigate biases in both feature and label embedding spaces in image classifiers.


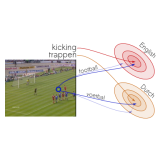

Pascal Mettes, William Thong, Cees G. M. Snoek. International Journal of Computer Vision (IJCV), 2021. [paper] [arxiv] [code] We derive spatial and semantic priors to recognize unseen actions in videos with zero training samples.


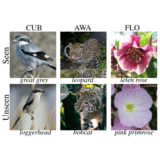

William Thong and Cees G. M. Snoek. British Machine Vision Conference (BMVC), 2020. [paper] [arxiv] [code] [video] We mitigate the classifier's bias toward classes seen during training in generalized zero-shot learning.


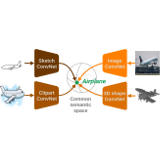

William Thong, Pascal Mettes, Cees G. M. Snoek. Computer Vision and Image Understanding (CVIU), 2020. [paper] [arxiv] [code] We search for categories from any source domain to any target domain in a common semantic space.


Mehmet O. Turkoglu, William Thong, Luuk Spreeuwers, Berkay Kicanaoglu. AAAI Conference on Artificial Intelligence (AAAI), 2019. [paper] [arxiv] [poster] [code] We compose a scene layer by layer, with explicit control over the generation of all scene elements.


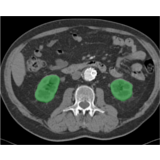

William Thong, Samuel Kadoury, Nicolas Piché, Christopher J. Pal. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization (CMBBE), 2018. [paper] Initially presented at MICCAI-DLMIA 2015. We segment healthy and abnormal kidneys in CT scans with a patch-based ConvNet.


William Thong, Stefan Parent, James Wu, Carl-Éric Aubin, Hubert Labelle, Samuel Kadoury. European Spine Journal (ESJ), 2016. [paper] We cluster 3D representations of scoliotic spine deformations with a stacked auto-encoder.


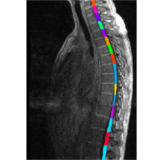

Eugénie Ullmann, Jean François Pelletier Paquette*, William Thong*, Julien Cohen-Adad. International Journal of Biomedical Imaging (IJBI), 2014. [paper] We build a template to predict vertebral levels in MR images.


LaChance* and Thong* et al. AAAI workshops, 2023.


Gu et al. CVPR workshops, 2021.


Ibrahimi et al. ACM Multimedia (Demo track), 2019.


Thong et al. MICCAI-CSI workshop, 2014.


Cohen-Adad et al. OHBM, 2014.


Reviewer for CVPR, ECCV, ICCV, and NeurIPS. Outstanding reviewer awards at CVPR 2021 and BMVC 2020 and 2021.


Webpage template from Jon Barron.